My Two Cents On TFS Build Server Best Practices

Update August 2016: If you can, use the VSTS Hosted Build Service. If you can’t, you may be surprised to learn that the Hosted Build Service uses the D2_V2 Azure VM size, which has 2 cores and 7GB of RAM, for a single build agent. So that is something to consider when you size your own build servers.

(Update 2014: below I mention that 2GB per agent is a good starting point. This is probably not enough if you’re publishing or scripting database changes using sqlpackage.exe, particularly if one of the databases is a multi-partitioned data warehouse and you plan on having multiple builds running simultaneously. For this scenario I’d say 4GB per agent, if you can afford it.)

It’s amazing how important build servers are to a team working in Scrum; the team relies on a fast build server that can build and deploy code quickly. A CI build gives the devs feedback that the code is good to be deployed to the test environment, and the testers rely on the build deploying to that environment rapidly. If it takes 10 minutes to run a CI build and 20 minutes to deploy to a test environment, that’s half an hour spent waiting to test a new feature. Over the course of a sprint many builds are run, which adds up to a significant amount of time spent waiting on builds. Invariably the developers and testers whinge that the builds are taking too long, and the boss comes up and asks you to find out why they’re so slow and speed them up. So, based on my experience, here are my thoughts on how you can check that you’ve optimized your builds and build servers as much as you can.
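To put a rough number on that waiting time, here is a small Python sketch of the arithmetic. The 10-minute CI build and 20-minute deployment come from the example above; the 40 builds per sprint is a hypothetical figure, so plug in your own.

```python
def wait_minutes_per_build(ci_minutes: float, deploy_minutes: float) -> float:
    """Minutes between a check-in and the feature being testable."""
    return ci_minutes + deploy_minutes


def wait_hours_per_sprint(ci_minutes: float, deploy_minutes: float,
                          builds_per_sprint: int) -> float:
    """Total hours spent waiting on builds and deployments over one sprint."""
    return wait_minutes_per_build(ci_minutes, deploy_minutes) * builds_per_sprint / 60


if __name__ == "__main__":
    # 10-minute CI build plus 20-minute deployment, as in the text.
    print(wait_minutes_per_build(10, 20))     # 30
    # Hypothetical: 40 builds deployed to test during one sprint.
    print(wait_hours_per_sprint(10, 20, 40))  # 20.0
```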

You can add more Build Agents to a Build Controller: if you have CI builds, install the Build Controllers and Build Agents on a box separate from the TFS Application Tier, and create one Build Agent for every core on that server. If you find that performance suffers when all the agents are running builds, you can disable one build agent, but it’s better to have it and not want it than to need it and not have it.
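The one-agent-per-core rule is simple enough to script when you’re planning a box. This is a minimal Python sketch; `recommended_agent_count` is a hypothetical helper, and note that `os.cpu_count()` reports logical processors, so on a hyper-threaded server it may return more than the physical core count you had in mind.

```python
import os


def recommended_agent_count(cores: int, disabled: int = 0) -> int:
    """One build agent per core, minus any agents you have chosen to disable."""
    if cores < 1:
        raise ValueError("cores must be at least 1")
    if not 0 <= disabled < cores:
        raise ValueError("disabled must leave at least one agent running")
    return cores - disabled


if __name__ == "__main__":
    cores = os.cpu_count() or 1  # os.cpu_count() can return None
    print(f"{cores} logical processors -> {recommended_agent_count(cores)} agents")
    # A four-core box where one agent was disabled after performance suffered.
    print(recommended_agent_count(4, disabled=1))  # 3
```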

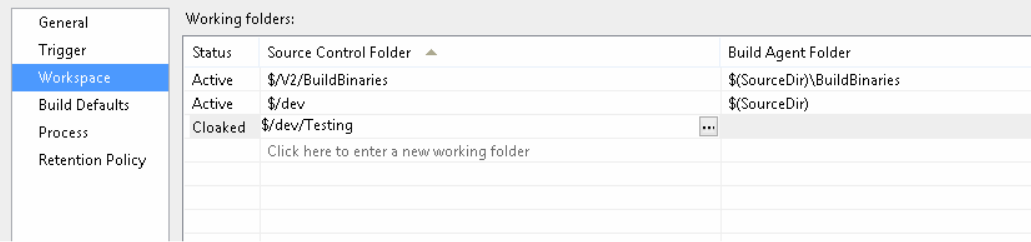

Create separate disks for each Build Agent: lots of IO happens in a build, particularly at the beginning and the end. Our boxes suffer because we have 1Gb iSCSI connections to the SAN, but having 4 separate disks gives us marginal gains in build speed when more than one build is running at the same time. Save space on your disks by cloaking any files and folders you don’t need, then use the space you’ve saved to increase the number of builds you retain, so you can track down where a build went wrong. If you’re smart and followed the MSDN article on how to set up your build agents, you will have installed Visual Studio 2012 (or whatever IDE the devs use) on them, meaning you can compile through the IDE, which sometimes gives you errors that the build output just won’t, regardless of how many times you build. Here is the link to the build agent setup, but there are many more links w/r/t best practices for setting up your build service. Read first, then deploy. http://msdn.microsoft.com/en-us/library/vstudio/bb399135.aspx
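Here is a sketch of spreading agents across disks, assuming hypothetical drive letters E: to H: and a hypothetical `Builds\AgentN` folder layout. It only generates the paths; you would still enter each one as that agent’s working directory when configuring the agents.

```python
from pathlib import PureWindowsPath
from typing import Dict, List


def agent_working_dirs(agent_count: int, drives: List[str]) -> Dict[int, PureWindowsPath]:
    """Give each agent a working directory on its own disk.

    Agents are assigned to drives round-robin, so if there are more agents
    than disks, some disks end up shared.
    """
    if not drives:
        raise ValueError("need at least one drive")
    return {
        # Append a backslash so "E:" becomes the drive root, not a drive-relative path.
        agent: PureWindowsPath(drives[(agent - 1) % len(drives)] + "\\", "Builds", f"Agent{agent}")
        for agent in range(1, agent_count + 1)
    }


if __name__ == "__main__":
    # Hypothetical: four agents and four dedicated data disks.
    for agent, path in agent_working_dirs(4, ["E:", "F:", "G:", "H:"]).items():
        print(agent, path)
```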

Build servers that run only the Build Controller service do not require blazing disks or processors, but they do need some RAM: the minimum requirements for the host OS will suffice as a starting point. If you want to speed up your builds, then you DO need your agents to have blazing processors and disks. SSDs in RAID 0 make a huge difference! Seriously though, a build starts by getting the latest code, and after compiling it moves the compiled output from one location on the disk to another. If you have a considerable amount of data shifting around, you need fast IO so that this doesn’t become a bottleneck. With regard to RAM, I have found that 2GB per agent is a good measure of how much your box will need.

Parallelize tasks where you can, such as running unit tests and deploying databases. To do this you need to get acquainted with parallelizing work in MSBuild or in Workflow builds. MSBuild still seems to be the popular choice, and I recommend picking up the book Inside the Microsoft® Build Engine: Using MSBuild and Team Foundation Build.
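Here is a rough RAM sizing sketch in Python that combines the 2GB-per-agent rule of thumb with the 4GB figure from the 2014 update for sqlpackage.exe-heavy builds. The separate OS allowance and its 4GB value are my own assumptions, as is the helper name.

```python
def recommended_ram_gb(agents: int, os_baseline_gb: float,
                       per_agent_gb: float = 2.0) -> float:
    """RAM for a build-agent box: an OS allowance plus a fixed amount per agent."""
    if agents < 0:
        raise ValueError("agents must be non-negative")
    return os_baseline_gb + agents * per_agent_gb


if __name__ == "__main__":
    # Hypothetical 4GB allowance for the host OS on a four-agent box.
    print(recommended_ram_gb(4, os_baseline_gb=4))                    # 12.0
    # Per the 2014 update: about 4GB per agent when builds run sqlpackage.exe.
    print(recommended_ram_gb(4, os_baseline_gb=4, per_agent_gb=4.0))  # 20.0
```

For comparison, the August 2016 update notes that the hosted service gives a single agent 7GB of RAM.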

Regardless of whether you use Workflow or the old .proj files, at the core of every build MSBuild is used to compile your code. MSBuild will try to compile in parallel where it can, but you can increase the number of processes used in your build by adding the /m:n parameter to it (replace n with the number of processes you want to spin up for this build). Now, there are a number of caveats to using this. If you have followed the guideline of one agent per core and you set the number too high for all the builds, you’re going to kill your servers’ performance. If you have lots of references to other solutions, this can cause locked DLLs and consequently failed builds; plus, lots of dependencies between solutions means that MSBuild will not be able to compile many solutions in parallel, so use this wisely. Plus, this gives you evidence that it’s not the build that is slow, but that the solutions need reconfiguring for faster builds.
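To see how quickly /m:n and one agent per core multiply, here is a back-of-the-envelope check in Python. It assumes the worst case, in which every agent is running a build at the same time and each build starts the full n processes that /m:n allows; the helper names are hypothetical, and MSBuild may well start fewer processes in practice.

```python
def worst_case_processes(agents: int, max_cpu_count: int) -> int:
    """MSBuild processes that could run at once if every agent builds with /m:max_cpu_count."""
    return agents * max_cpu_count


def is_oversubscribed(cores: int, agents: int, max_cpu_count: int) -> bool:
    """True when the worst case asks for more processes than there are cores."""
    return worst_case_processes(agents, max_cpu_count) > cores


def max_safe_m(cores: int, agents: int) -> int:
    """Largest /m value that keeps the worst case within the core count (never below 1)."""
    return max(1, cores // agents)


if __name__ == "__main__":
    # One agent per core on a four-core box, as recommended above.
    print(is_oversubscribed(cores=4, agents=4, max_cpu_count=1))  # False
    print(is_oversubscribed(cores=4, agents=4, max_cpu_count=4))  # True: 16 processes on 4 cores
    print(max_safe_m(cores=4, agents=4))                          # 1
```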

MSBuild has other parameters that can help speed up builds. I typically use these arguments: /m:1 /p:TrackFileAccess=false;BuildSolutionsInParallel=false;IncrementalBuild=false /nr:false.

BuildInParallel allows the MSBuild task to process the list of projects passed to it in parallel, while /m (/maxcpucount) tells MSBuild how many processes it is allowed to start. The /nr (/nodeReuse) flag enables or disables the reuse of MSBuild nodes. A node corresponds to a project that’s executing; if you include the /m switch, multiple nodes can execute concurrently. You can specify the following values: True, where nodes remain after the build completes so that subsequent builds can use them (the default), or False, where nodes don’t remain after the build completes.

I strongly urge you to try out each potential time-saving setting individually on your builds, as they are far from perfect. As I mentioned, if you have many dependencies, building solutions in parallel can cause lots of locked DLLs. And if you only have a four-core machine with an agent per core, setting the /m switch too high can greatly degrade performance.
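One way to keep those experiments honest is to generate a set of argument strings that each change exactly one setting from your baseline, then time a build with each. Here is a minimal Python sketch: the baseline is the argument list quoted earlier, while the candidate values and the helper names (`render`, `one_at_a_time`) are hypothetical. It only produces the argument strings; running and timing the builds is left to your build definitions.

```python
from typing import Dict, Iterator, List, Tuple

# Baseline: the arguments quoted earlier, as a flat settings dict.
# "m" and "nr" are MSBuild switches; every other key is passed as a /p: property.
BASELINE: Dict[str, str] = {
    "m": "1",
    "TrackFileAccess": "false",
    "BuildSolutionsInParallel": "false",
    "IncrementalBuild": "false",
    "nr": "false",
}

# Hypothetical candidate values to trial; substitute your own.
CANDIDATES: Dict[str, List[str]] = {
    "m": ["2", "4"],
    "BuildSolutionsInParallel": ["true"],
    "nr": ["true"],
}

SWITCHES = ("m", "nr")


def render(settings: Dict[str, str]) -> str:
    """Render a settings dict as an MSBuild argument string."""
    props = ";".join(f"{k}={v}" for k, v in settings.items() if k not in SWITCHES)
    return f"/m:{settings['m']} /p:{props} /nr:{settings['nr']}"


def one_at_a_time(baseline: Dict[str, str],
                  candidates: Dict[str, List[str]]) -> Iterator[Tuple[str, str, str]]:
    """Yield (setting, value, arguments) for variants differing from the baseline in one setting only."""
    for name, values in candidates.items():
        if name not in baseline:
            raise KeyError(f"unknown setting: {name}")
        for value in values:
            variant = dict(baseline)  # copy so the baseline is never mutated
            variant[name] = value
            yield name, value, render(variant)


if __name__ == "__main__":
    print("baseline:", render(BASELINE))
    for name, value, args in one_at_a_time(BASELINE, CANDIDATES):
        print(f"{name}={value}: {args}")
```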

So there you have it: unsurprisingly, the most important things are a super-fast server with fast network access and a well-designed set of solutions without many inter-dependencies, and these things are probably out of your control. But if you experiment with the flags and cloaking, and make sure that you’re not overloading your servers with activities they just can’t cope with, you might be pleasantly surprised by just how much faster your builds become.