Choosing the Right Processor for your SQL Server Workload

Last year, we planned to move our data warehouse from a 2 socket server to a 4 socket server that was left in the data centre from an abandoned project to virtualize our desktops.

To cut a long story short; the performance was far worse, despite more RAM, more cores, and attached to a DAS via SAS.

The first thing we understood was that increasing the RAM would not make a lot of difference. The data warehouse is over 6TB, and if we have multiple importing jobs running, plus all the temp tables we use when switching in partitions, and all the selects being run when processing cubes at the end of the ETL phase, that 64GB extra RAM isn’t really going to help a great deal.

We were concerned that the DAS was not performing as we’d hoped, as it was one of the big changes we’d made. However through firing up the perflogs and checking the appropriate counters we could find no issues.

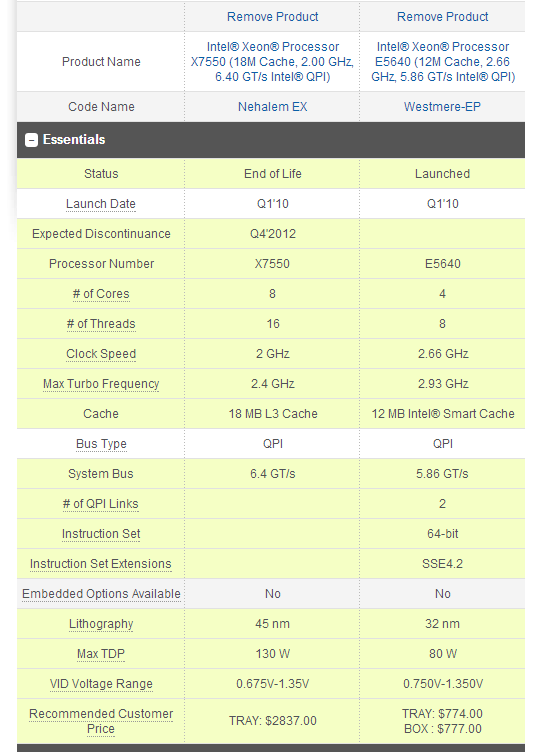

One of the things that we noticed was that the new 4 socket server had a slower clock count than the 2 socket machine. Could this be the source to our perceived performance drop? After a bit of investigation, and testified by Glenn Berry at SQL Performance, a larger server is not necessarily a faster server:

http://www.sqlskills.com/blogs/glenn/a-sql-server-hardware-tidbit-a-day-day-4/

For a full compare, follow this link: http://ark.intel.com/compare/46498,47923

So we wrote a PowerShell script that loops over calculating sine and returns the duration. Run the script below and you will get an accurate reflection of how long it takes a single core calculation to run.

powershell.exe -sta

$sw = [Diagnostics.Stopwatch]::StartNew()

$i=1

do{[math]::sin(1); $i++}

while ($i -le 10000)

$sw.Stop()

$sw.Elapsed

Based on the results we got back from the machines we noticed that the R910 was slower than the R610, by about 30%. Coincidentally the actual performance of the R910 with SQL was about 30% worse than the R610, so the numbers matched up. It was clear that the size of our workload benefited from faster cores with a decent capacity than slower cores with a larger capacity. So we attached the DAS to the old server, and the other server is used as a Hyper V host, which Ops were very happy about as they thought they were going to get the older machine, with less cores and RAM. We felt that maybe the extra L3 cache would have helped for a data warehouse workload, and maybe for heavier loads this would hlep, but again for our workload we’d need a processor with a faster clock speed than larger cache at this juncture. If we were to upgrade now, we’d stick with a two socket server, and if I had the luxury to test, I’d start with the Xeon E5-2690, which has eight-cores plus hyper-threading clocked at 2.90GHz (Turbo Boost at 3.8GHz), and the L3 cache set at 20MB: same amount of cores for licencing, faster clock and larger L3 cache.

I’m sure that for some people, 4 sockets is the way to go as a 2 socket machine couldn’t cope with the capacity of their workload. And for us, we assumed our workload would benefit from more cores. But it was clear from our findings that the current 2 Socket box with faster cores was better suited to our workload than a 4 socket behemoth. It also saved us a lot of money in terms of licensing as SQL Server Enterprise is licensing model is core based.